Empowering human security control room operators with meaningful real-time alerts

iSentry is a full-spectrum AI analysis platform for real-time monitoring of video surveillance imagery, built to handle a wide range of complex, live video environments.

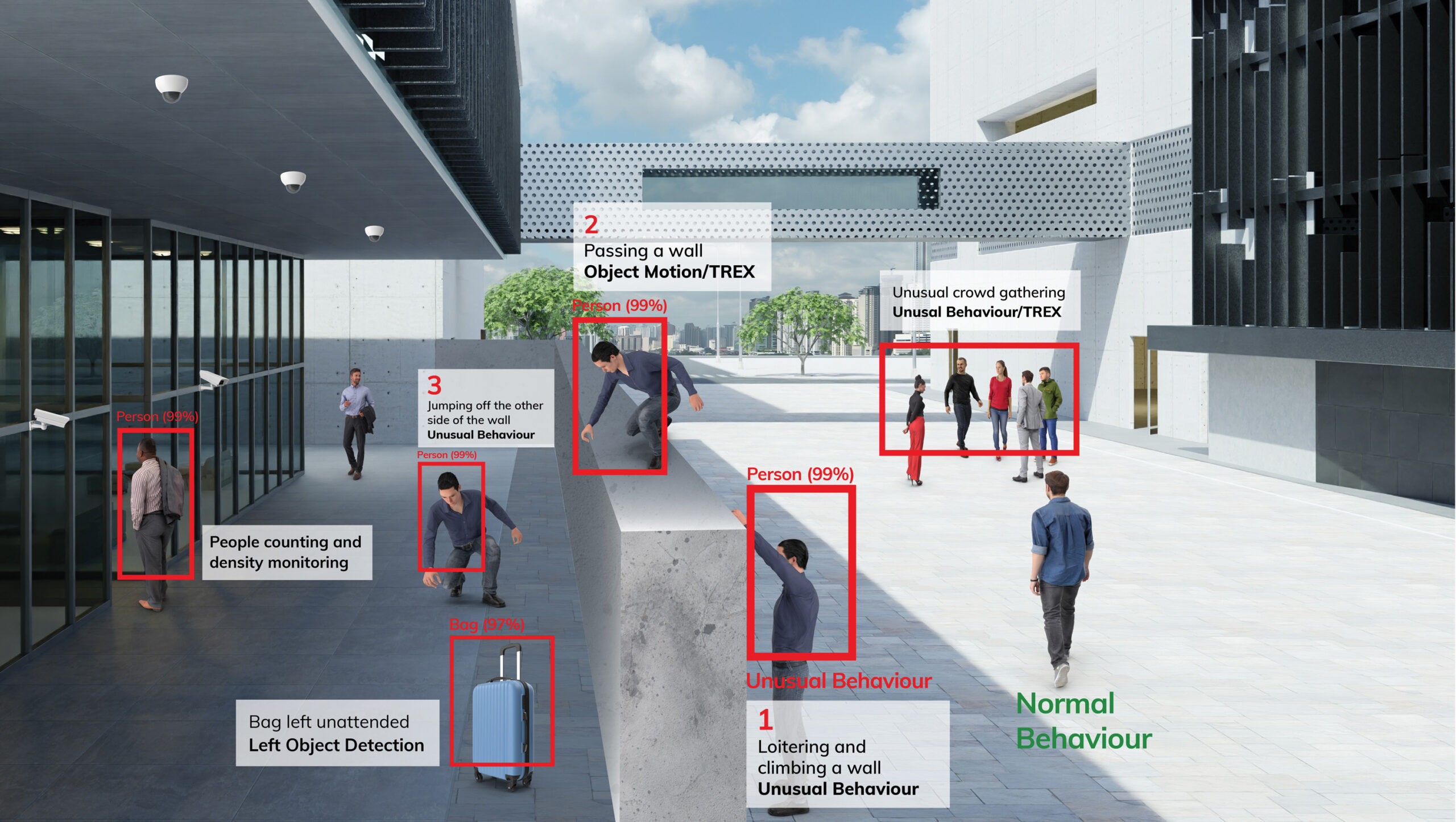

Our unsupervised, self-learning networks extract relevant data from imagery in two ways: behavioural anomaly detection (Unusual Behaviour) in fluid, busy environments, and advanced motion analysis (TREX) in more static surroundings where intrusion detection is required. The resulting alerts are then classified for better contextualisation and sent to a powerful rules engine which automates part of the decision-making process.

Put simply, iSentry monitors massive volumes of CCTV footage and alerts the control room when it observes something out of the ordinary.

iSentry delivers a financial return on existing CCTV surveillance infrastructure, which is normally used only for forensic analysis of past incidents, by adding real-time analysis and response capabilities.

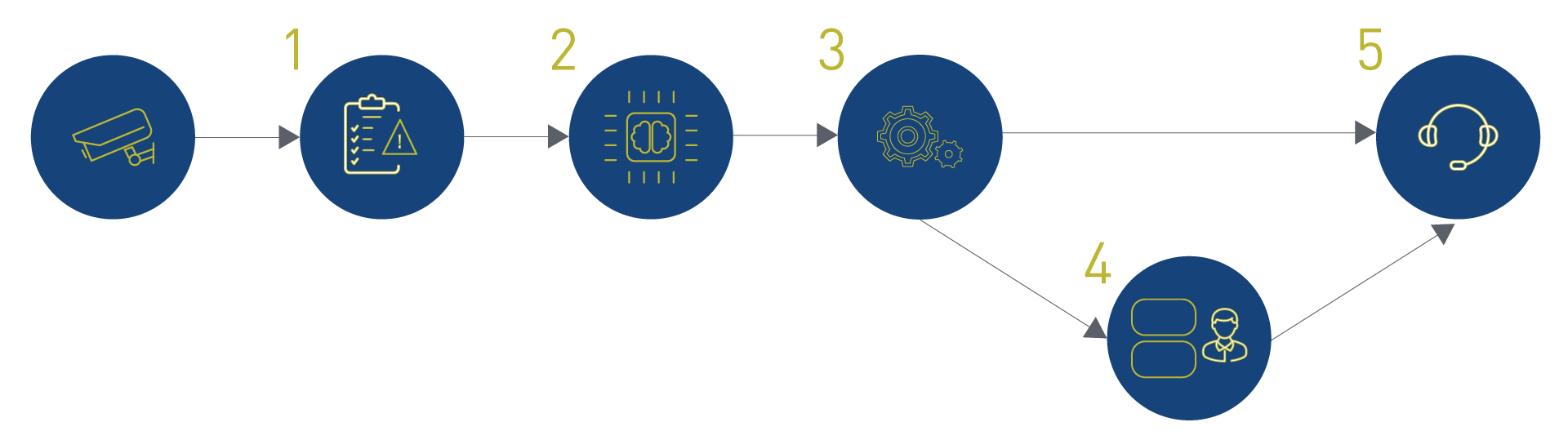

iSentry: How it works

1. DECODING AND ANALYSIS

iSentry efficiently decodes multiple video streams in real time. The streams are analysed by one or more AI-based analytics, and any detection raises an “alert”, which starts the iSentry process.

2. DATA ENRICHMENT

A number of frames extracted from the alert video are analysed by a GPU-based Deep Learning server. Analysing only these frames keeps processing efficient and gives the system a much greater understanding of the alert.

3. AUTOMATION

With the greater understanding gained from step 2, the system automatically dismisses many alerts and can escalate others directly to alarm.

4. LIVE ALERTS

(THE HUMAN COMPONENT)

Alerts that are neither dismissed nor automatically elevated to alarm in step 3 are presented to the operator as a list of current alerts, each containing classified images and a video clip of approximately 5-10 seconds. The operator then decides whether an alert is important (escalating it to alarm) or not, thus eliminating most false positives.

5. ALARMS AND ESCALATIONS

(TAKING ACTION)

Once an alarm is generated, either automatically in step 3 or by the operator in step 4, the iSentry process is complete. All data associated with the alarm, including video, classifications, metadata and operator input, is included with the alarm to be processed.
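The five steps above can be read as a small state machine in which every alert ends up either dismissed or raised as an alarm. The following is a minimal sketch under stated assumptions, not iSentry's implementation: the names `Alert`, `Status`, `enrich`, `automate` and `operator_review`, and the label sets passed to `automate`, are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    ALERT = "alert"          # raised by an analytic in step 1, not yet decided
    DISMISSED = "dismissed"  # dropped in step 3 or by the operator in step 4
    ALARM = "alarm"          # escalated in step 3 or by the operator in step 4


@dataclass
class Alert:
    camera_id: str
    classifications: list = field(default_factory=list)  # filled in step 2
    status: Status = Status.ALERT
    decided_by: Optional[str] = None  # "rules" or "operator" once decided


def enrich(alert, labels):
    """Step 2: attach object classes (supplied by the caller in this sketch)."""
    alert.classifications = list(labels)
    return alert


def automate(alert, auto_dismiss, auto_alarm):
    """Step 3: decide automatically when a (hypothetical) rule applies."""
    labels = set(alert.classifications)
    if labels & auto_alarm:
        alert.status, alert.decided_by = Status.ALARM, "rules"
    elif labels and labels <= auto_dismiss:
        alert.status, alert.decided_by = Status.DISMISSED, "rules"
    return alert  # otherwise the alert stays open for the operator


def operator_review(alert, is_important):
    """Step 4: the operator decides only the alerts that step 3 left open."""
    if alert.status is Status.ALERT:
        alert.status = Status.ALARM if is_important else Status.DISMISSED
        alert.decided_by = "operator"
    return alert
```

In this sketch an alert classified only as `"animal"` would be dismissed by `automate(alert, {"animal"}, {"person"})`, while an alert with an unlisted class stays open until `operator_review` is called.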

Core capabilities

Key enhancements allow the use of OpenVINO for integrated Deep Learning and hardware acceleration, with suitable HD graphics or dedicated GPU decoding hardware supplementing CPU processing. As a result, a single workstation or enterprise server can manage hundreds of video analytics channels, with quicker response times and fewer anomalies.

Detecting Unusual Behaviour

This is the most important iSentry algorithm. Detection of Unusual Behaviour is driven by an unsupervised AI platform whose learning is based on pixel analysis. The system ‘learns’ how objects normally move in a scene; after establishing a norm, it creates an alert on any deviation. This reduces the amount of video a monitoring control centre operator has to analyse by up to 98%. A single Unusual Behaviour license often replaces 5-10 licenses for rule-based algorithms.
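The idea of learning a norm and alerting on deviations can be illustrated with a deliberately simple toy. It is not iSentry's algorithm, which the text describes only as unsupervised and pixel-based. The sketch assumes the image is divided into grid cells and that some earlier stage reports which cells contain motion; the class name `MotionNormModel` and the `min_frequency` threshold are hypothetical.

```python
from collections import Counter


class MotionNormModel:
    """Toy model: learn how often each grid cell sees motion, flag rare cells."""

    def __init__(self, min_frequency=0.05):
        self.min_frequency = min_frequency  # hypothetical rarity threshold
        self.frames_seen = 0
        self.motion_counts = Counter()  # (row, col) -> frames with motion

    def learn(self, moving_cells):
        """Record the cells that contained motion in one training frame."""
        self.frames_seen += 1
        self.motion_counts.update(set(moving_cells))

    def unusual_cells(self, moving_cells):
        """Return the moving cells where motion was rare during learning."""
        if self.frames_seen == 0:
            return set()  # no norm established yet, so nothing can deviate
        return {
            cell
            for cell in set(moving_cells)
            if self.motion_counts[cell] / self.frames_seen < self.min_frequency
        }


# Example: motion along the top row is normal, motion at (5, 5) is not.
model = MotionNormModel()
for _ in range(100):
    model.learn([(0, 0), (0, 1)])
deviations = model.unusual_cells([(0, 1), (5, 5)])
```

A real system would also model direction, speed and time of day, but the structure stays the same: a learning phase builds the norm and a detection phase compares new observations against it.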

Related applications:

Left and missing object detection, tripwire trespassing, directional getaway violation, people and object counting, unusual sound detection, trip & fall, smoke detection, etc.

Threat Ranking and Extraction (TREX)

The iSentry Platform is exceptional at target acquisition thanks to its ability to learn the environment covered by cameras. iSentry accurately extracts true target information even under the most challenging environmental conditions. It can distinguish between moving foliage and true targets, which keeps false alarms to a minimum. Additionally, its proprietary neural networks perform industry-leading object classification on visual or thermal cameras.

The platform provides expert configuration options that eliminate environmental noise. Its multi-scene analysis detects small targets at great distance and accurately tracks and classifies humans at short distance, simultaneously in the same view. iSentry can also run on PTZ cameras, providing autonomous scene stability tools and suppressing alerts while the camera moves from one pre-set position to the next.

Related applications:

Perimeter and area surveillance, intrusion protection, border control etc.

DeFence

DeFence detects and tracks very fast-moving and very small objects. This is vital, for example, for detecting objects thrown over fences or walls, such as bombs or cell phones. It is also used to replace infrared beam detectors along walls or fences, since it can detect intrusions on narrow strips only a few centimetres wide.

Left Object Detection

Left Object Detection creates an alert when an object enters a scene and remains stationary for longer than a predefined amount of time, or when an object is removed from a scene.
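The stationary-object half of this rule amounts to a dwell timer per tracked object. The sketch below is one hedged way to express it and covers only the "left object" case, not object removal. It assumes an upstream tracker supplies an object id, a position in pixels and a timestamp in seconds; `LeftObjectDetector`, `max_stationary_seconds` and `tolerance_px` are hypothetical names and defaults.

```python
import math


class LeftObjectDetector:
    """Raise one alert when a tracked object stays put for too long."""

    def __init__(self, max_stationary_seconds=60.0, tolerance_px=5.0):
        self.max_stationary_seconds = max_stationary_seconds
        self.tolerance_px = tolerance_px  # jitter below this is not movement
        self._anchors = {}   # object_id -> (x, y, time it came to rest there)
        self._alerted = set()

    def update(self, object_id, x, y, timestamp):
        """Feed one position; return True once when the dwell limit is exceeded."""
        anchor = self._anchors.get(object_id)
        if anchor is None or self._moved(anchor, x, y):
            # New object, or it moved: restart the dwell timer at this position.
            self._anchors[object_id] = (x, y, timestamp)
            self._alerted.discard(object_id)
            return False
        dwell = timestamp - anchor[2]
        if dwell > self.max_stationary_seconds and object_id not in self._alerted:
            self._alerted.add(object_id)
            return True
        return False

    def _moved(self, anchor, x, y):
        return math.hypot(x - anchor[0], y - anchor[1]) > self.tolerance_px
```

Returning `True` only once per stationary period keeps a single abandoned bag from flooding the operator with repeated alerts.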

Video Tripwire (indoor)

For sterile environments or specific areas of a video scene, iSentry has easy-to-use, multi-directional video tripwires to alert on all moving objects within the specified area that cross a defined line.
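A directional tripwire is commonly implemented as a segment intersection test between the wire and an object's movement between two frames. The following sketch shows that general technique under stated assumptions. It is not taken from iSentry: the function names are hypothetical, and positions are assumed to be `(x, y)` tuples from an upstream tracker.

```python
def _side(a, b, p):
    """Return +1 if p lies on the positive side of line a->b, else -1.

    Points exactly on the line are treated as the negative side, so a
    crossing is counted exactly once even if a position lands on the wire.
    """
    cross = (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])
    return 1 if cross > 0 else -1


def crossing_direction(wire_start, wire_end, prev_pos, curr_pos):
    """Return +1 or -1 if the move prev_pos->curr_pos crosses the wire, else 0."""
    before = _side(wire_start, wire_end, prev_pos)
    after = _side(wire_start, wire_end, curr_pos)
    if before == after:
        return 0  # the object stayed on the same side of the wire
    # The object changed sides of the infinite line; make sure its path
    # actually passed between the two endpoints of the wire.
    if _side(prev_pos, curr_pos, wire_start) == _side(prev_pos, curr_pos, wire_end):
        return 0
    return after  # sign tells which way the wire was crossed


def tripwire_alert(wire_start, wire_end, prev_pos, curr_pos, directions=(1, -1)):
    """Alert when the wire is crossed in one of the configured directions."""
    return crossing_direction(wire_start, wire_end, prev_pos, curr_pos) in directions
```

Passing `directions=(1,)` restricts the alert to one direction, while the default alerts on crossings either way.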

Reliable Information – Data Enrichment

Applying more than a dozen Deep Learning neural networks, iSentry can enrich video analysis with detailed information on alerts generated by the underlying core analytics.

The Deep Learning engine recognises multiple classes of objects, even at difficult camera angles and over large distances. Current networks are constantly improved, and new specialised networks and targets are being developed, such as fire detection, compliance detection for facemasks or personal protective equipment, and human posture analysis.

Virtual Operation – Automation

The iSentry logic engine plays the role of a video surveillance operator and as such can decide for itself whether an alert should be identified as an alarm or be ignored. This decision is based on factors such as the number and combination of object types that trigger the alert, the time of day and object size, or even the likelihood of accurate classification.
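A decision of this kind can be sketched as a short function over the factors listed above: object types and their combination, time of day, object size and classification likelihood. Everything in the example is an assumption made for illustration. The `Detection` fields, the thresholds, the night window and the individual rules are hypothetical and do not describe iSentry's actual rule set.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Detection:
    label: str         # object class from the Deep Learning step
    confidence: float  # classification likelihood, 0.0 to 1.0
    height_px: int     # apparent object size in the image


def decide(detections, at, min_confidence=0.6, min_height_px=20,
           night_start=time(22, 0), night_end=time(6, 0)):
    """Return "alarm", "ignore" or "operator" for one alert."""
    # Keep only detections that are both large enough and confidently classified.
    trusted = [d for d in detections
               if d.confidence >= min_confidence and d.height_px >= min_height_px]
    if not trusted:
        return "operator"  # nothing reliable to decide on, so ask a human
    labels = {d.label for d in trusted}
    is_night = at >= night_start or at < night_end  # window wraps past midnight
    if labels == {"animal"}:
        return "ignore"    # only animals in view
    if {"person", "vehicle"} <= labels:
        return "alarm"     # a combination of object types, at any time
    if "person" in labels and is_night:
        return "alarm"     # a person on site outside working hours
    return "operator"
```

Returning `"operator"` as the fallback mirrors the workflow described earlier: anything the rules cannot settle is left for a human to decide.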

Additional Business Intelligence (BI) reports complement real-time analysis with KPIs for managerial purposes and monitoring trends.

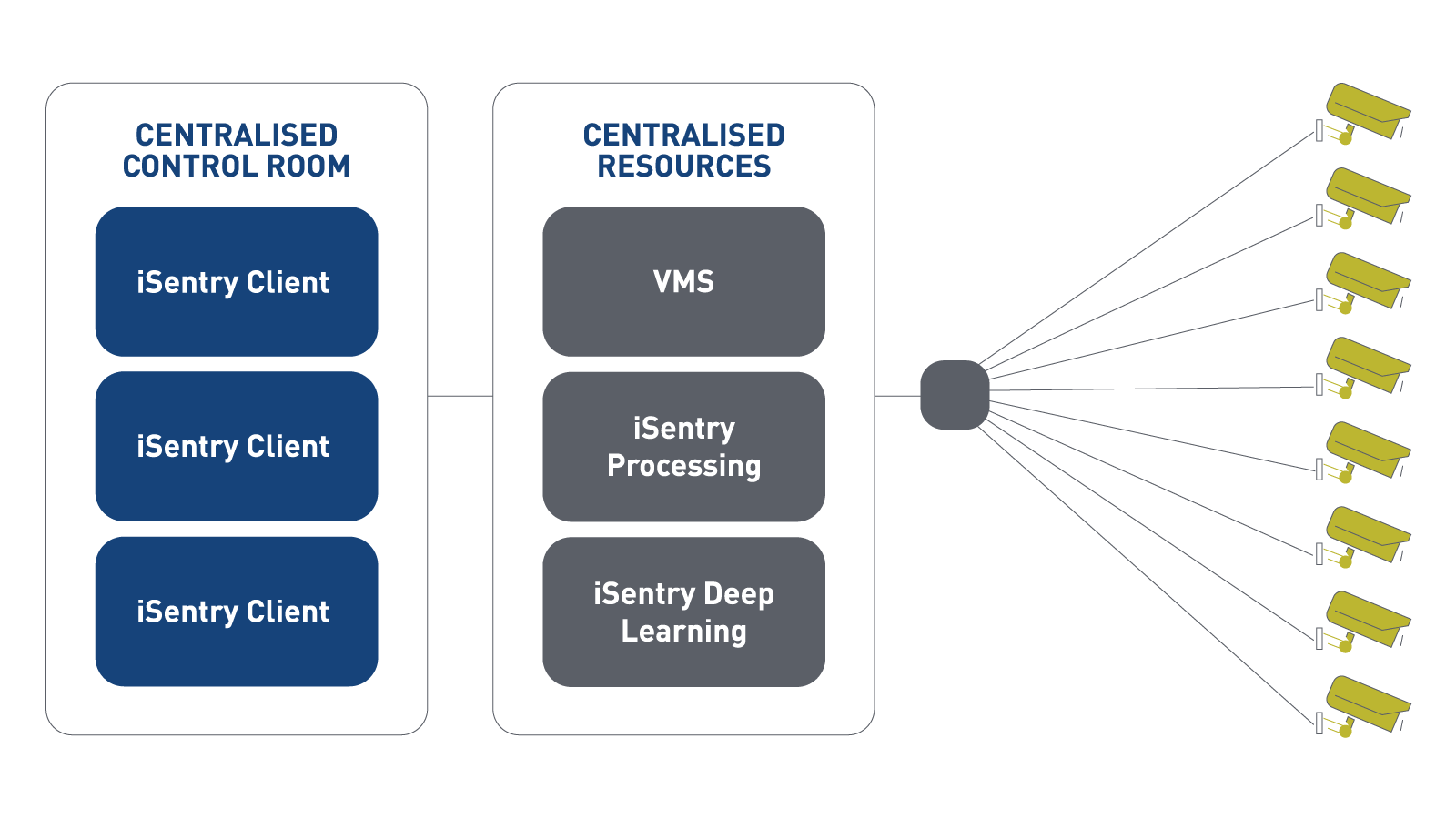

Flexible Architecture

Centralised Architecture

iSentry’s Centralised Architecture offers low complexity and economies of scale. In a network containing many cameras, however, it may require extra bandwidth to bring all video to a single central location.

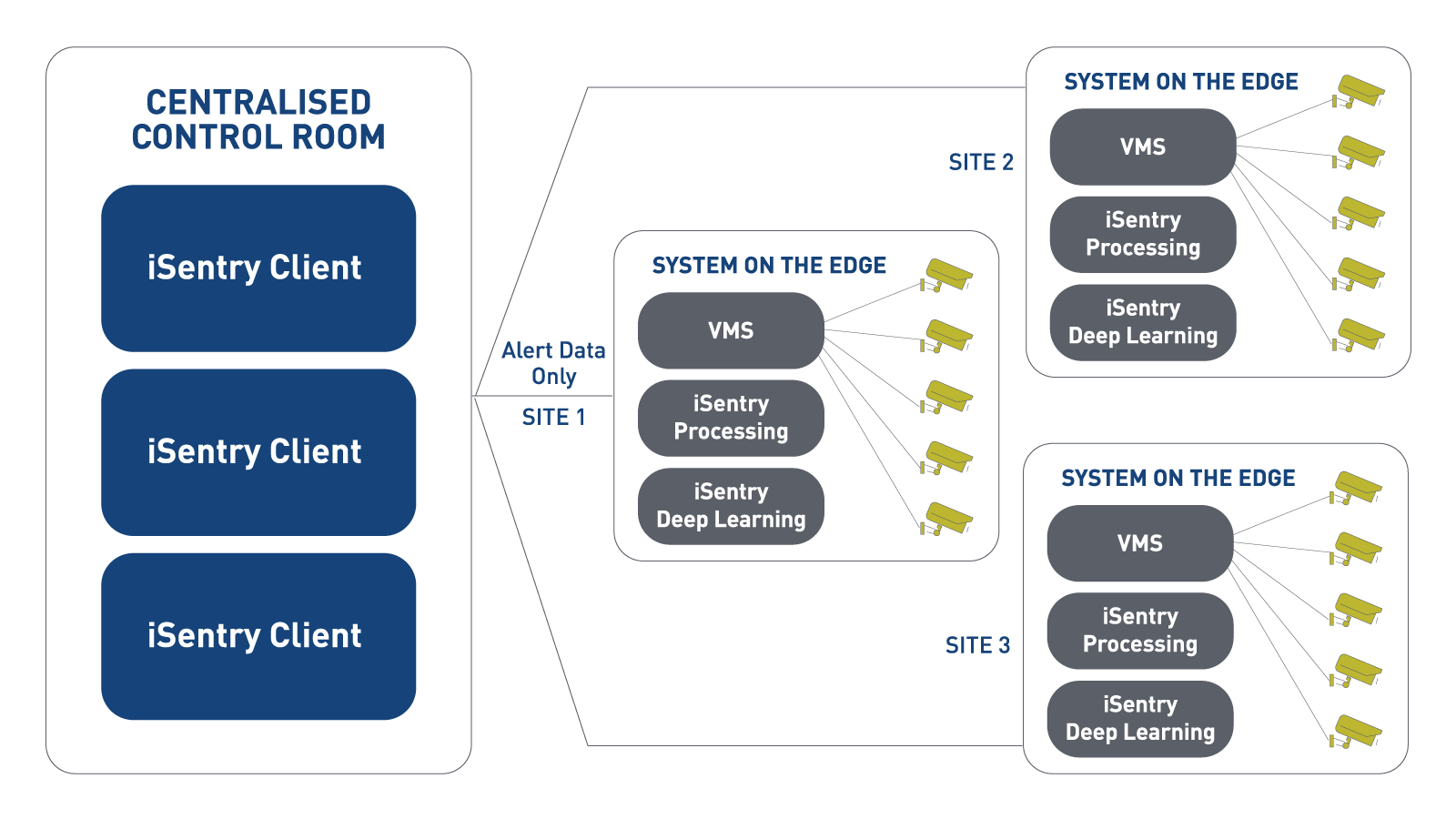

Distributed Architecture

Distributed Architecture is suitable for a central control room monitoring several small and large distributed sites. Because all processing is handled on site, the number of cameras is not limited. Sites become fully autonomous, each with the capacity for its own control room, with records and data stored on site. Only alert data is transmitted to the central control room, so bandwidth usage is limited to the alert and video data for each alert.

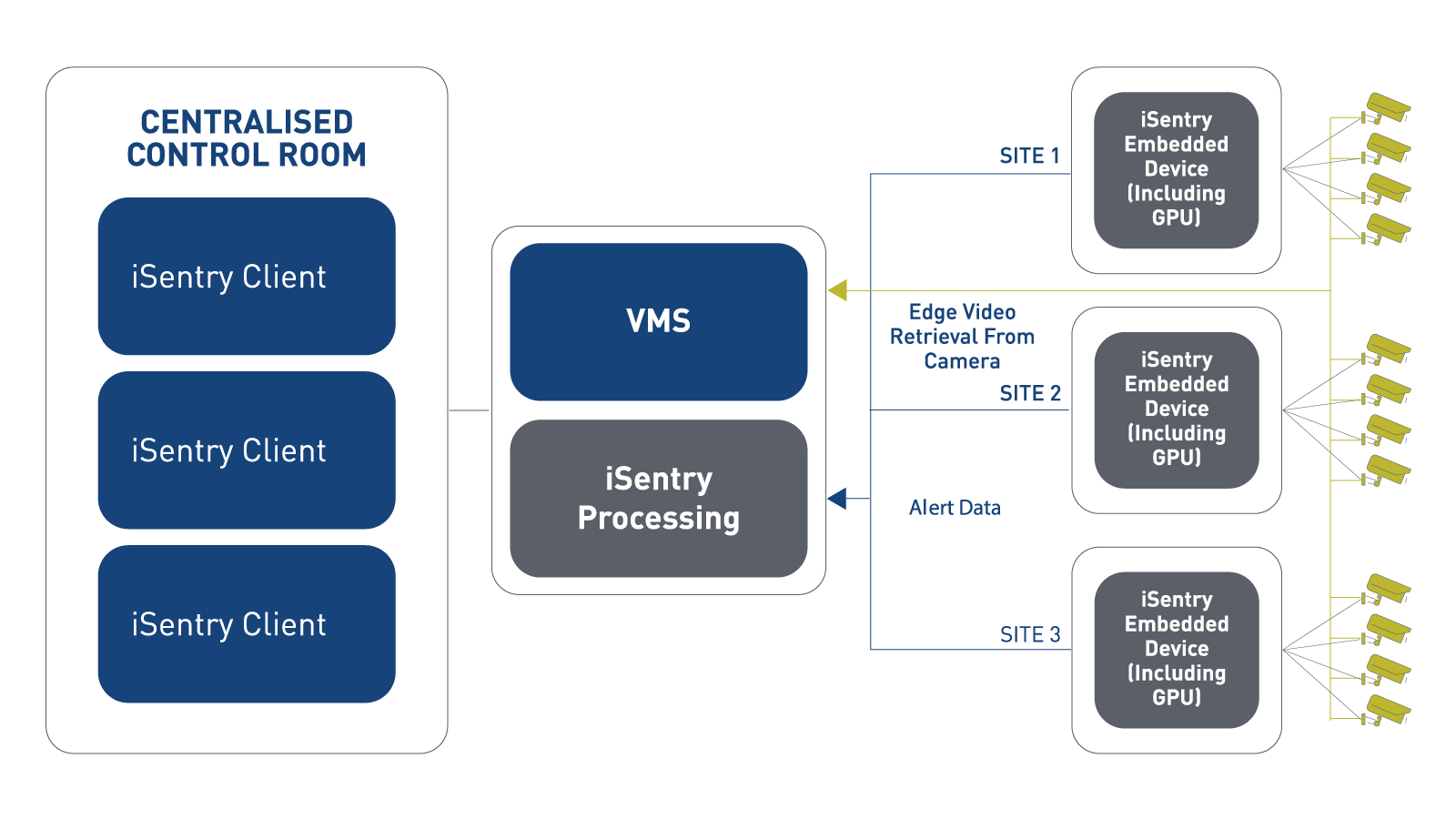

Complete Edge Architecture

Micro embedded device with GPU

This architecture takes advantage of a central Video Management System (VMS) with distributed processing while limiting the bandwidth required to stream live video. All iSentry processing, including Deep Learning, is done on the embedded edge device (such as an NVIDIA Jetson Nano), which imports live video from the cameras and sends only alert data and alert video to the central control room.

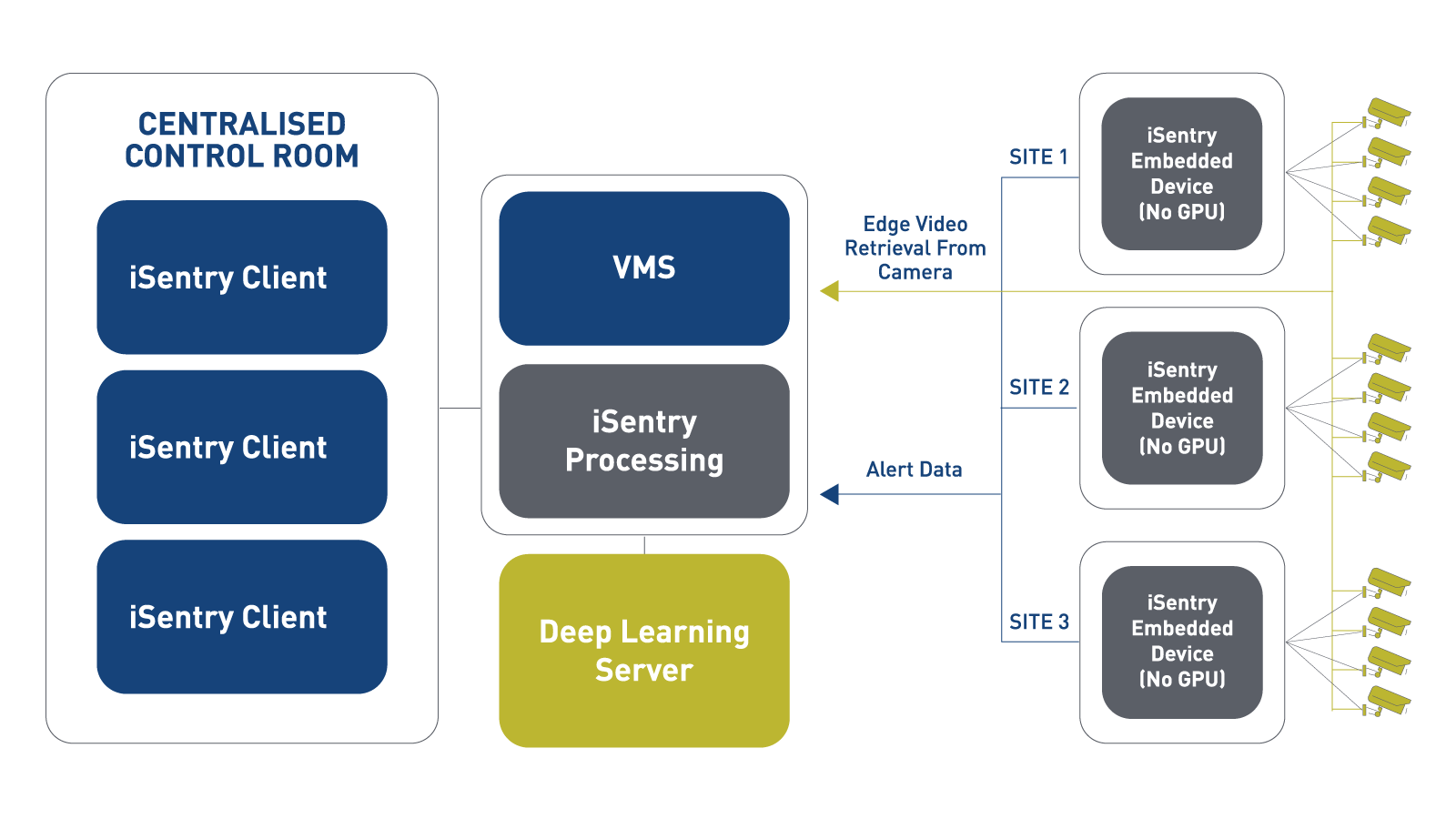

Partial Edge Architecture

Micro embedded device without GPU

Only the first processing layer of iSentry runs on the edge device (such as a Raspberry Pi), while the subsequent Deep Learning and Rules Engine processing layers are managed centrally. The advantages of this architecture are that Deep Learning processing can be a fully shared resource in the control room and that a wider range of embedded devices is supported.

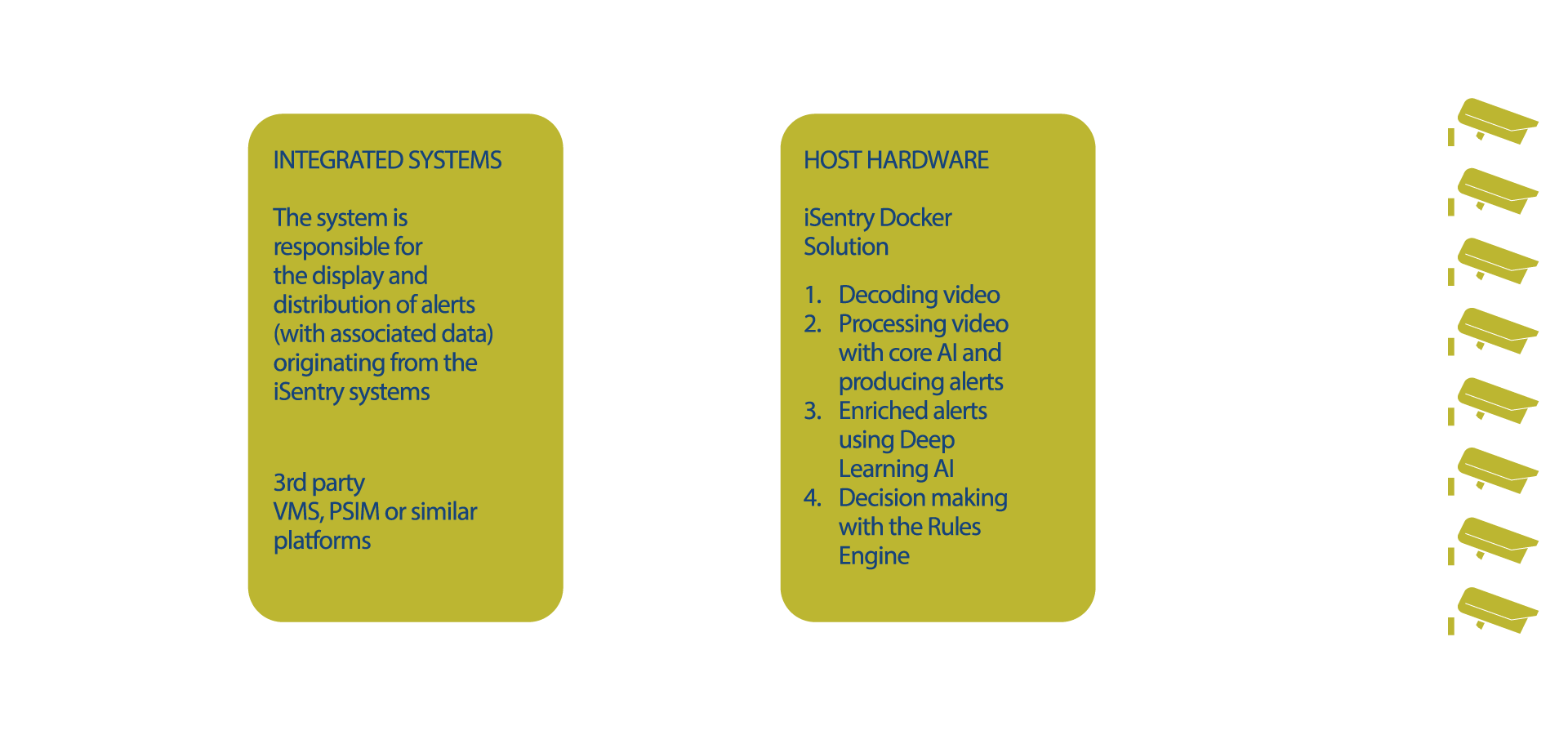

Integration Options

Integration partners

Integration

- DIGIFORT

- EVALINK

- GENETEC

- IMMIX

- MANITOU

- MILESTONE

- PROOF360

- RAMSYS

- SBN

- VISETEC

On-Camera Integration

- MOBOTIX

Application Integration

- SAFR

Technical Partners

- NVIDIA

- INTEL